GPyTorch

GPU Acceleration for Gaussian Processes

This is a talk I gave at the Berlin Machine Learning Seminar hosted by Ben in the offices of Lateral.

Gaussian Processes are a class of popular and powerful probabilistic models which can be derived straight from a Normal distribution. While vanilla GP’s are very flexible due to their nonparametric formulation, inference and training both rely on the evaluation of the entire data set through several kernel matrices, their products, log determinants and inverses.

These kernel matrices over the entire training data set and their numerous use in matrix matrix pdocuts, log determinants and inverses result in poor scalability to large data sets. The advent of GPU’s in machine learning has offered the possibility of computing matrix vector and matrix matrix products efficiently and in parallel.

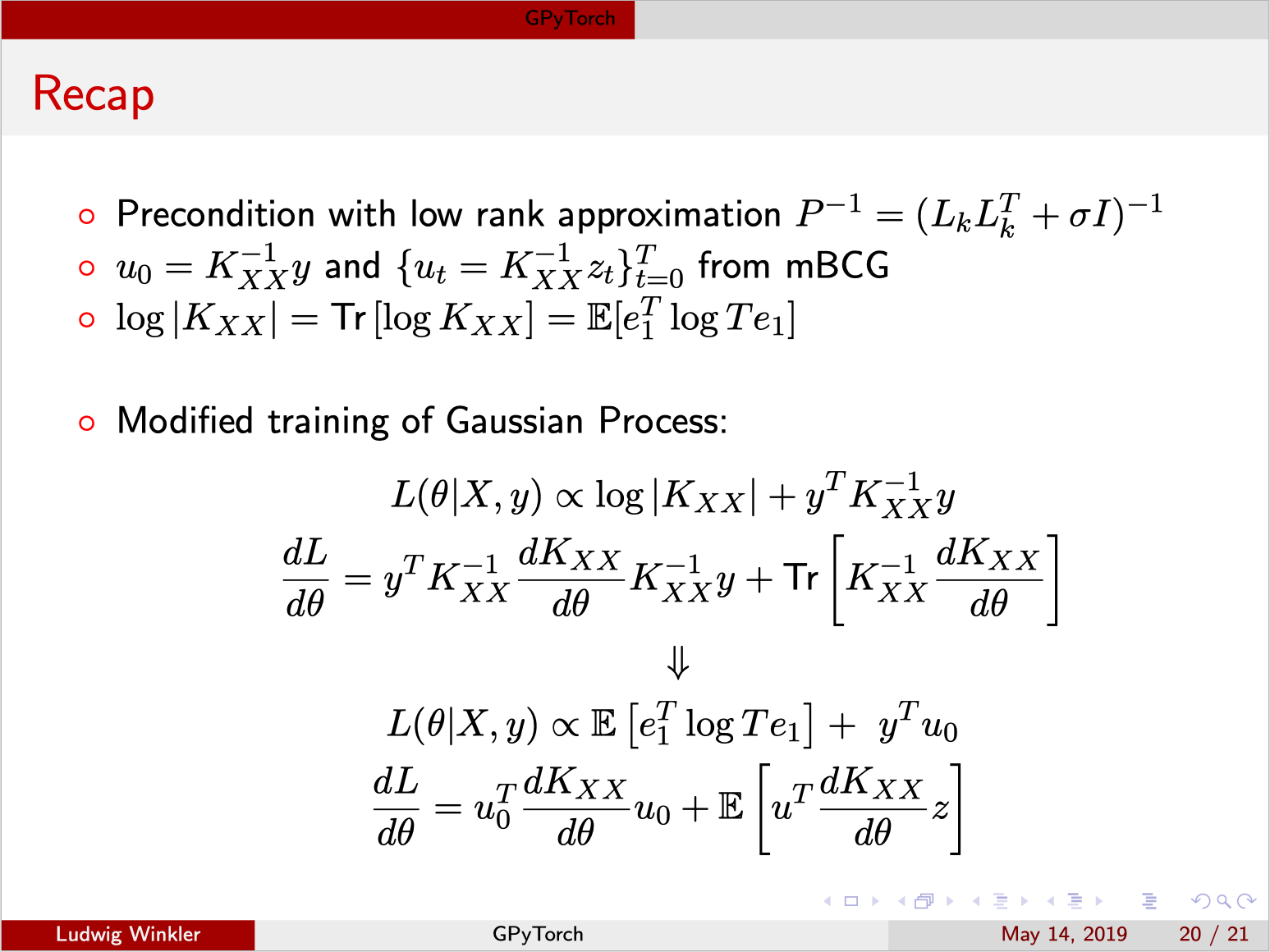

GPyTorch introduces several randomized and parallelized algorithms as replacements for exact computations which reduce the complexity of inference and training in GP’s. These include stochastic approximations of the trace, parallel conjugate gradient descent and several efficient applications of eigendecompositions.

More importantly, all of these adaptations allow full utilization of parallel hardware during inference and training. Considerable focus will be on conjugated gradient descent which allows the efficient optimization of quadratic optimization problems through the use of line search and conjugate search directions.

The core idea of the original paper is to exploit a parallel version of conjugate gradient descent to the fullest such that all relevant terms for the cost function and the gradients of the kernels can be obtained from a single modified batch conjugate descent run.

The talk can be downloaded here.